Have you realized that your backend is too slow, and that it hurts your performance? This is a common web performance issue. In this article, I will guide you through identifying the causes and give you some hints on how to fix them.

How slow is too slow? My advice is never to have a Time to First Byte (measured on WebPageTest with a “Cable” connection) greater than 600 milliseconds, network included. If performance really matters to you, try to get under 300ms.

Note: I wrote this article for readers with intermediate technical knowledge. However, even if you are not technical, the part about hardware could be of some interest.

First, don’t rush to buy a more powerful server. A faster machine can certainly help, but most of the time the slowness lies on the software side: heavy code, requests to an external API, a database that does not scale… and many other things.

Now I will tell you a secret: most of today’s websites are very slow. But… they cheat!

External caching to the rescue

How do they cheat? They rely on a strong caching strategy.

The server you are reading from is a good old (and slow) WordPress that can’t respond to any request in less than 500ms. But it is actually hidden behind an external cache that responds much faster than the backend would.

So what is an external cache? It is a store that keeps entire pages, so that they are served almost instantly the next time a visitor asks for them.

You can build your own external cache by creating a “reverse proxy” server. The best known free proxies are:

Or you can pay for a CDN (Content Delivery Network), which will act as a “supercache”. It brings the cache geographically closer to your users thanks to a worldwide network. I recommend using one if some of your customers are more than 3,000 kilometers / 2,000 miles away from your server. It will also protect your website from DDoS attacks.

My favorite CDN services are:

- KeyCDN (get US$10 in free credits through my link)

- CloudFlare (they have a free offer for small non-profit websites)

Caching has one drawback: it limits real-time changes. Let’s say you have set up a cache with a TTL (Time To Live) of 2 hours. Any change you make on a page can take up to 2 hours to go live. You will have to find a good balance between your need for fresh content and the number of users who will hit an expired cache and wait for the slow backend.

Caching can also be touchy in some cases:

- if the information really needs to stay second-fresh,

- if the page content is personalized,

- if most of your users are logged in,

- if the shopping basket HTML code is generated by the backend,

- …
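To make the TTL idea more concrete, here is a minimal sketch in Python of how a backend could tell an external cache how long to keep a page, using the standard `Cache-Control` HTTP header. The function name `cache_headers` and its parameters are my own illustration, and whether your reverse proxy or CDN honours these headers depends on its configuration.

```python
def cache_headers(ttl_seconds: int, personalized: bool = False) -> dict:
    """Build HTTP response headers aimed at a shared (external) cache.

    Hypothetical helper for illustration; adapt it to your framework.
    """
    if personalized or ttl_seconds <= 0:
        # Personalized pages must not be stored by a shared cache.
        return {"Cache-Control": "private, no-store"}
    # s-maxage applies to shared caches (reverse proxy, CDN) only.
    # max-age=0 is an assumption of this sketch: browsers revalidate on
    # each visit, so only the external cache holds the page.
    return {"Cache-Control": f"public, max-age=0, s-maxage={ttl_seconds}"}


TWO_HOURS = 2 * 60 * 60  # the 2-hour TTL used as an example in the text

print(cache_headers(TWO_HOURS))
print(cache_headers(TWO_HOURS, personalized=True))
```

The `personalized` switch covers the touchy cases listed above: a page that differs per user should bypass the shared cache entirely.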

What about internal caching?

Caching is not only useful for final HTML files. It can also speed up the backend itself by storing pieces of data that are expensive to compute or to fetch.

A few internal caching examples:

- If every single page asks your database for the number of happy customers since the store was created, then you should cache the response to skip this heavy database request.

- If your navigation menu is complex and generated from a dozen database requests, then cache the HTML output.

- If your application queries an external API for minute-fresh currency exchange rates, then cache the response too, even if it is only for a minute.

Once you start caching, you can’t stop because it is damn easy. Remember to create a “cache free” mode for debugging purposes, otherwise it can become a nightmare.
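Here is a minimal sketch of such an internal cache in Python, with the “cache free” switch built in. It stores values in a plain in-process dictionary, which is only a stand-in for a real caching daemon. The names `cached`, `CACHE_ENABLED` and `fetch_exchange_rate` are mine, and the exchange rate is a made-up value.

```python
import time
from typing import Any, Callable, Dict, Tuple

CACHE_ENABLED = True  # set to False for the "cache free" debugging mode

# key -> (expiry timestamp, value); an in-process stand-in for a cache daemon
_store: Dict[str, Tuple[float, Any]] = {}


def cached(key: str, ttl_seconds: float, compute: Callable[[], Any]) -> Any:
    """Return the cached value for `key`, recomputing it once the TTL has expired."""
    if not CACHE_ENABLED:
        return compute()
    now = time.monotonic()
    entry = _store.get(key)
    if entry is not None and entry[0] > now:
        return entry[1]  # still fresh: skip the expensive call
    value = compute()
    _store[key] = (now + ttl_seconds, value)
    return value


calls = 0


def fetch_exchange_rate() -> float:
    """Stand-in for a slow external API call (hypothetical, returns a made-up rate)."""
    global calls
    calls += 1
    return 1.08


rate_a = cached("eur_usd", 60, fetch_exchange_rate)
rate_b = cached("eur_usd", 60, fetch_exchange_rate)  # served from the cache
print(rate_a, rate_b, "backend calls:", calls)
```

The second lookup never reaches `fetch_exchange_rate`, which is the whole point: the expensive call happens at most once per minute, however many pages are generated.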

Last but not least, make sure your internal cache is fast. The most important speed factor is where it physically stores the data. For example, the default cache in the Drupal CMS saves into the database. It is better than nothing but, as a rough rule of thumb, reading from a database is about 10 times slower than reading from a disk, which is itself at least 10 times slower than reading from memory.

I personally use Memcached on my projects, a sober, efficient and open-source caching daemon that stores data in memory. You can also give Redis a try, which is a bit slower but has many more features (structured data storage, hard-drive persistence, security…). Both have connectors for almost every backend language and framework.

Profilers know everything about your backend

Roll up your sleeves, put your helmet on, we will now enter the machine!

A profiler is a tool that instruments your backend language to track and measure almost everything. It will point you to the slowest functions, components, database queries and external requests. Sometimes it even tells you if some obscure server settings are wrong.

If you have a test machine similar to the production one, profile there to avoid disturbing your live website. If you do it on the production server, don’t forget to disable the profiler when you are done optimizing, because it adds a small overhead we really don’t need.

Note: if your website is hosted on a shared server, you probably won’t be allowed to “enter the machine”. You can try profiling your app on a local server or you can scroll directly to the hardware chapter.

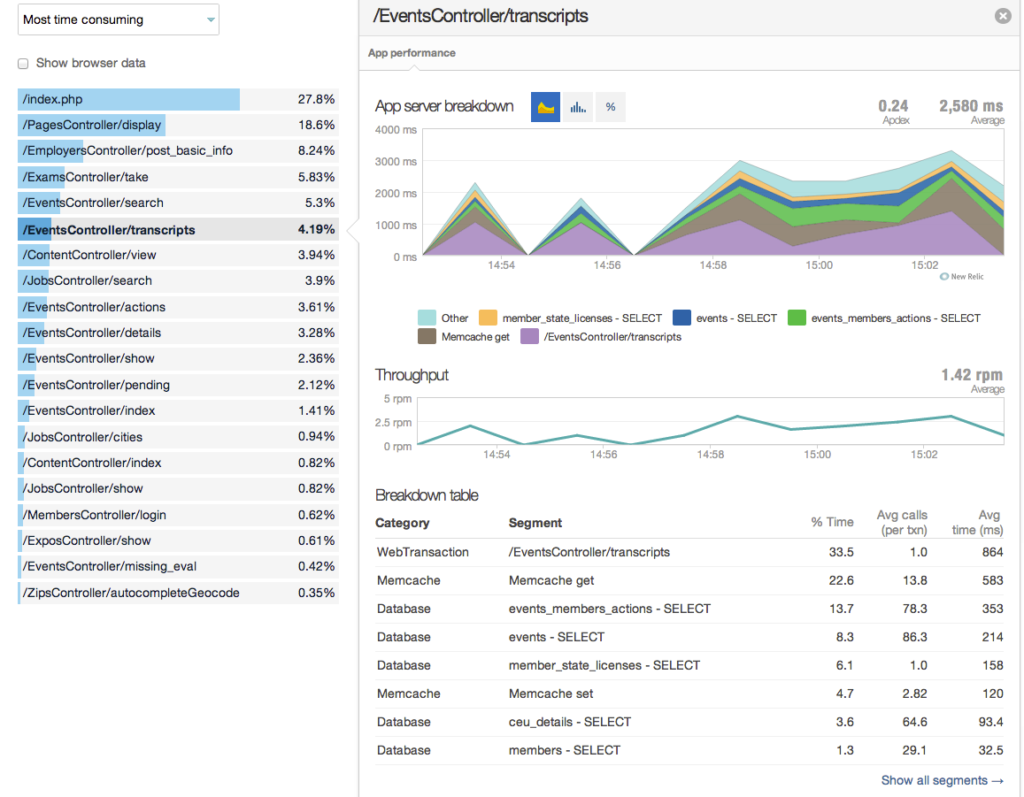

The best known player in the profiling industry is New Relic. It handles dozens of languages and can do almost anything. I suggest subscribing to its 30-day free trial, which leaves enough time to diagnose problems and fix them.

In the PHP world, BlackFire does a good job (15-day free trial). There is also Tideways, which I haven’t tested (30-day free trial).

JProfiler is quite popular for Java. Ruby’s Rack Mini Profiler looks interesting. Python includes its own profiler called cProfile, and Node.js also ships with a built-in profiler.
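To give you an idea of what a profiler reports, here is a small sketch using Python’s built-in cProfile. The functions `build_page` and `slow_sum` are invented for the example and simply burn some CPU; on a real project you would wrap the code that generates a page.

```python
import cProfile
import io
import pstats


def slow_sum(n: int) -> int:
    """Deliberately wasteful function (hypothetical) so it shows up in the report."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def build_page() -> int:
    """Stand-in for the code that generates a page."""
    return sum(slow_sum(20_000) for _ in range(20))


profiler = cProfile.Profile()
profiler.enable()
result = build_page()
profiler.disable()

# Sort by cumulative time so the most expensive call chains come first.
buffer = io.StringIO()
stats = pstats.Stats(profiler, stream=buffer)
stats.sort_stats("cumulative").print_stats(10)
report = buffer.getvalue()
print(report)
```

In the report, the `ncalls` column tells you how often a function ran and `cumtime` how long it took including everything it called. A function with a high `cumtime` and a high `ncalls` is usually the first candidate for caching or rewriting.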

The database is too slow, what to do?

If the profiler shows your database as a bottleneck, pay attention to:

- the average number of requests to create a page: reduce it at all costs (internal caching is your friend).

- the slowest requests: try to rewrite them for speed or change your tables’ indexes.

- multiple requests on the same table: try to group them into a single bulk query or update.
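The last point can be sketched as follows, using Python’s built-in sqlite3 module with an in-memory database. The `products` table and its content are invented for the example; with a real database server, each query also costs a network round trip, so the gain from grouping is larger than what you would see here.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT, price REAL)")
# executemany inserts all the rows with a single prepared statement.
conn.executemany(
    "INSERT INTO products (id, name, price) VALUES (?, ?, ?)",
    [(i, f"product-{i}", i * 1.5) for i in range(1, 101)],
)

wanted_ids = [3, 17, 42, 99]

# Slow pattern: one query per product.
one_by_one = [
    conn.execute("SELECT name FROM products WHERE id = ?", (pid,)).fetchone()[0]
    for pid in wanted_ids
]

# Grouped pattern: a single query fetches every product at once.
placeholders = ", ".join("?" for _ in wanted_ids)
grouped = [
    row[0]
    for row in conn.execute(
        f"SELECT name FROM products WHERE id IN ({placeholders}) ORDER BY id",
        wanted_ids,
    )
]

print(one_by_one == grouped)
```

Both approaches return the same rows; the grouped version just asks the database once instead of four times.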

You can also find database profiling tools. Sometimes they are included by default in the software, such as the Database Profiler in MongoDB or the slow query log option in MySQL. The all-in-one New Relic solution also includes one in the package.

External requests are too slow, what to do?

Requests to another server (such as an API) while a page is being generated should set off a big red warning light. Very few things can hurt your backend performance more. Here are some tips if you face this problem:

- reduce their number urgently (internal caching is your friend more than ever).

- try to parallelize them.

- check how fast the other server responds and whether it is hosted on another continent.

- if it is a “writing” request, send it to a locally installed queuing service such as RabbitMQ, and build a small app to handle the transfer asynchronously.
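Parallelizing is easier than it sounds. Below is a minimal sketch with Python’s standard `concurrent.futures` module. The `call_api` function is hypothetical and only sleeps for 200 ms instead of using the network, and the service names are made up.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def call_api(name: str) -> str:
    """Stand-in for a slow external request (hypothetical; sleeps instead of using the network)."""
    time.sleep(0.2)
    return f"{name}: ok"


services = ["rates", "stock", "reviews"]  # made-up service names

# Sequential: the waiting times add up.
start = time.perf_counter()
sequential = [call_api(s) for s in services]
sequential_time = time.perf_counter() - start

# Parallel: the requests wait at the same time, in separate threads.
start = time.perf_counter()
with ThreadPoolExecutor(max_workers=len(services)) as pool:
    parallel = list(pool.map(call_api, services))
parallel_time = time.perf_counter() - start

print(f"sequential: {sequential_time:.2f}s, parallel: {parallel_time:.2f}s")
```

Threads work well here because the time is spent waiting for the other server, not computing. The total duration gets close to that of the slowest request instead of the sum of all of them.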

The backend code is too slow, what to do?

Most of the time, this is due to a heavy framework and you can’t do much. But I can still give you some tips:

- The profiler will point you to the slowest functions. Try to rewrite them. If you don’t understand which part of a function is slow, split it into smaller functions so that the profiler measures each part separately.

- If you see a slow loop, try to simplify it. Check if you can extract some code from it.

- Logging can be slow too, when intensively used. Try to replace the default logger with a faster one.

- If you use PHP, check which version you run. The recent versions are getting faster and faster.
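As an illustration of the slow loop tip, here is a small Python sketch where some work that never changes is moved out of the loop. The VAT rates and orders are illustrative values, and the function names are mine.

```python
VAT_RATES = [("FR", 0.20), ("DE", 0.19), ("ES", 0.21)]  # illustrative values
ORDERS = [
    {"country": country, "amount": 10.0 * i}
    for i, country in enumerate(["FR", "DE", "ES"] * 1000)
]


def total_slow(orders, vat_rates):
    total = 0.0
    for order in orders:
        # Loop-invariant work: the lookup table is rebuilt for every order.
        rates = dict(vat_rates)
        total += order["amount"] * (1 + rates[order["country"]])
    return total


def total_fast(orders, vat_rates):
    rates = dict(vat_rates)  # built once, outside the loop
    total = 0.0
    for order in orders:
        total += order["amount"] * (1 + rates[order["country"]])
    return total


print(total_slow(ORDERS, VAT_RATES) == total_fast(ORDERS, VAT_RATES))
```

Both functions compute the same total. The second one simply avoids repeating identical work 3,000 times, which is exactly the kind of waste a profiler makes visible.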

If everything else fails, let’s strengthen the hardware!

First things first, choose a server geographically close to the majority of your users, even if you use a CDN. The CDN will not magically remove the distance penalty for uncached resources. Now let’s talk about server strength.

If your website is hosted on a shared server, I am not surprised it is slow. As it is a complete black box, you can’t get any insight into where the performance bottleneck is. This is part of the provider’s strategy, so that you will be inclined to upgrade to a higher plan… but it will still be a black box.

You guessed right: I don’t like most shared hosting companies. If I were you, I would switch to a dedicated server that I manage myself. But you are probably not fluent in web server configuration.

So my advice would be: choose a hosting provider that really cares about performance:

- for WordPress or WooCommerce websites I would recommend Kinsta or Pressidium. They are expensive ($30 and $42 per month respectively), but really, really fast with no bullshit.

- for other PHP-based technologies (such as Magento, Laravel, Drupal, Prestashop or Joomla) my research led me to Cloudways.

If you own a virtual or a dedicated server, you will be able to detect the hardware bottleneck. First, take a look at the type of hard drive. SSDs are at least 3 times faster than HDDs and much less expensive than they used to be a few years ago, so it is a no-brainer.

Then, check processor and memory usage with a free tool such as “top” or “htop”. If your processor’s cores often hit 100%, it is not a good sign. Try to choose a machine with more cores or stronger cores. If memory usage regularly reaches the limit, add more RAM. It is that simple.

Virtual server or dedicated server? I personally prefer bare-metal dedicated servers. They are faster and not subject to the “noisy neighbor effect”. But they are also twice as expensive.

If you want to migrate your website to another hosting provider, the best value for money dedicated servers I know – with SSD drives of course – are:

- OVH: they rent good dedicated servers starting at $81 / €62 per month, and you can choose among several locations around the world. The downside is their basic customer service, which tends to charge for additional services.

- LiquidWeb (US only): they are pricier ($119) but they have a real customer service that can save you a lot of energy. Their servers are also more powerful, so all in all the price is fair.

Virtual servers have one significant advantage: you can scale them up (or down) easily in case you need more (or less) horsepower. I personally had a bad experience with a slow virtual server a couple of years ago, and I have not tried one again since. I recently heard some positive feedback about one provider: DigitalOcean.

Another strategy, instead of choosing a more powerful server, is to increase the number of servers. There is no single right way to do so; it all depends on your current architecture. You could have several full-stack servers with load balancing, separate the database from the rest, split backend and frontend… Contact me if you need help.

What about backend slowness related to user peaks?

There is one last topic we have not talked about: your website gets slower when too many users are browsing it at the same time. I plan to deal with this subject in detail in a later article. But here are some quick ideas to fix it in the meantime:

- Use a CDN.

- Double check that every request is correctly cached by the CDN. Analyzing your server’s access logs with an appropriate tool can help: search for the most frequent requests.

- Fix every single 404 error (and any other 4xx or 5xx status code), because error responses usually pass through CDNs and reverse proxies uncached and hit your server.

- Use load testing to simulate peaks on your development server, or during the night on your production server. I can help on this kind of project too.
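The access log check from the list above can be sketched in a few lines of Python. The log lines below are made-up sample data in the common access log format, and `summarize` is a hypothetical helper; a dedicated log analysis tool will do a better job on real traffic.

```python
from collections import Counter

# Made-up sample data in the common access log format.
SAMPLE_LOG = """\
203.0.113.5 - - [10/Oct/2023:13:55:36 +0000] "GET /products HTTP/1.1" 200 5120
203.0.113.6 - - [10/Oct/2023:13:55:37 +0000] "GET /products HTTP/1.1" 200 5120
203.0.113.7 - - [10/Oct/2023:13:55:38 +0000] "GET /old-page HTTP/1.1" 404 312
203.0.113.8 - - [10/Oct/2023:13:55:39 +0000] "GET /favicon.ico HTTP/1.1" 404 0
203.0.113.9 - - [10/Oct/2023:13:55:40 +0000] "GET /old-page HTTP/1.1" 404 312
"""


def summarize(log_text):
    """Count the requests per path, and the 4xx/5xx responses per (status, path)."""
    paths = Counter()
    errors = Counter()
    for line in log_text.splitlines():
        parts = line.split('"')  # the request line sits between double quotes
        if len(parts) < 3:
            continue  # skip lines that do not match the expected format
        request = parts[1].split()
        status_fields = parts[2].split()
        if len(request) < 2 or not status_fields:
            continue
        path, status = request[1], status_fields[0]
        paths[path] += 1
        if status.startswith(("4", "5")):
            errors[(status, path)] += 1
    return paths, errors


paths, errors = summarize(SAMPLE_LOG)
print("Most frequent requests:", paths.most_common(3))
print("Most frequent errors:", errors.most_common(3))
```

Any path that shows up at the top of either list while your CDN is active deserves a closer look: either it is not cached as expected, or it is an error that should be fixed at the source.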

Note: This article contains affiliate links. I may receive a small commission if a purchase is made through these links.